Google’s SEO Process: A Comprehensive Overview

Google’s search engine uses a multi-stage process to find, understand, and rank content from across the web. This report breaks down each stage of that SEO process and examines key technical details, ranking factors, algorithm updates, best practices, the influence of AI, and emerging SEO trends. Each section includes examples and references to authoritative sources for clarity.

1. Complete SEO Process: Crawling, Indexing, Ranking & Serving

Crawling: Google’s journey starts with crawling – discovering new and updated pages. Google’s web crawler, Googlebot, traverses the web by following links and sitemaps to find content. It “fetches” page URLs and downloads their HTML, images, and other resources (Crawling, indexing and ranking: Differences & impact on SEO). Crawling is like a librarian scouring books: it is the foundation for building the search index (How Search Engines Work: Crawling, Indexing, Ranking, & More). Because crawling the entire web is resource-intensive, Google uses algorithms to decide which sites to crawl, how often, and how many pages per site (How Search Engines Work: Crawling, Indexing, Ranking, & More). Well-structured sites with clear navigation, updated content, and XML sitemaps tend to get crawled more efficiently. Webmasters can assist crawling by providing sitemaps and using robots.txt to guide the crawler (How Search Engines Work: Crawling, Indexing, Ranking, & More). Large sites also need to be mindful of crawl budget, the number of pages Googlebot is willing and able to crawl – an aspect discussed further in the technical section below.
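To make the robots.txt and sitemap guidance concrete, here is a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt contents and the example.com URLs are hypothetical, and Python’s parser is not Google’s own parser, so treat the result as a rough check rather than a statement of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site (not taken from any real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawlable(parser: RobotFileParser, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
for url in (
    "https://www.example.com/products/blue-shoes",
    "https://www.example.com/cart/checkout",
):
    print(url, "allowed" if crawlable(parser, url) else "blocked")

# Sitemap URLs declared in robots.txt (requires Python 3.8 or newer).
print(parser.site_maps())
```

Running a check like this against a site’s important URLs is one way to catch an accidental Disallow rule before it keeps key pages from being crawled.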

Indexing: After crawling a page, Google indexes it by processing its content and storing it in Google’s vast search index (a database of web pages). Indexing involves analyzing the text, images, and other media on the page to understand what it’s about (Crawling, indexing and ranking: Differences & impact on SEO). Google will parse the HTML, extract keywords and metadata, note the freshness of content, and follow links on the page to discover more pages. Modern indexing also includes rendering the page (executing JavaScript) so that content loaded dynamically is included (more on rendering later). Not every crawled page is indexed – if Google deems a page low-quality or duplicate, it might choose not to index it. But those that are indexed are added to Google’s “library” of web pages, which now consists of hundreds of billions of pages. It’s important to ensure a site’s important pages are crawlable and indexable (not blocked by robots.txt or meta noindex tags) so they can appear in search results (Crawling, indexing and ranking: Differences & impact on SEO). Google’s indexing systems have evolved (for example, the Caffeine indexing update allowed Google to add content to the index continuously on the fly). Today, mobile-first indexing is used – meaning Google predominantly indexes the mobile version of pages. In fact, as of 2023 Google completed its rollout of mobile-first indexing for virtually all sites, indexing pages “from the eyes of a mobile browser” for ranking (Google says mobile-first indexing is complete after almost 7 years). This ensures that websites optimized for mobile (in content and structure) are prioritized in the index.

Ranking: When a user enters a search query, Google’s algorithms rank the indexed pages to find the most relevant results. Essentially, Google retrieves from its index all pages that might match the query, then orders them by relevance and quality using hundreds of ranking signals. Google’s systems “search the index for matching pages and display the results they believe have the highest quality and relevance” (Crawling, indexing and ranking: Differences & impact on SEO). What determines relevance? Many factors – including the page’s topic and content (does it contain the keywords and answer the query?), the page’s authority (links from other sites), the user’s context (location, language, device), and more (Crawling, indexing and ranking: Differences & impact on SEO). For example, a search for “baker” will show different results depending on if the user is in Paris or Hong Kong, and likely surface local bakeries, whereas “how to become a baker” will show more general guides – Google adjusts results based on context and intent (Crawling, indexing and ranking: Differences & impact on SEO). The exact ranking algorithms are proprietary, but we know they consider hundreds of signals (Google has mentioned content relevance, backlinks, page experience, and many others as key factors – discussed in detail later). The ranking stage may also involve producing “snippets” – the title and description shown for each result – and selecting any special result features (like images, news, or rich snippets) to display.

Serving Results: The final step is serving the results to the user. This is where Google’s search engine results page (SERP) is generated and delivered. After ranking the top results, Google assembles the SERP, which may include text snippets, URLs, dates, images, or rich result features (such as star ratings or FAQs if structured data is present). The focus in serving is delivering the results quickly and accurately. Google has multiple data centers globally, and typically it will serve results from the location nearest to the user for speed. The “serving” stage also involves personalizing results as needed (for instance, accounting for the user’s language or past search history if appropriate) and applying any safe-search or policy filters. In short, serving is taking the ranked list of relevant pages and presenting it in the useful format we see on Google’s results page. It’s the culmination of the process – crawling finds the content, indexing catalogs it, ranking orders it, and serving delivers the answer (How Search Engines Work: Crawling, Indexing, Ranking, & More).

In summary, Google’s SEO process consists of: crawling the web for content, indexing that content into Google’s database, ranking content in response to a query using many factors, and serving the results in the search page. If any of these stages fail (e.g., a page isn’t crawled or indexed), that page cannot appear in search. Site owners aiming for Google visibility need to ensure their content can be crawled and indexed, and optimize factors that influence ranking and result display.

2. Technical SEO Details: Crawling Budget, Structured Data, Indexing & Rendering

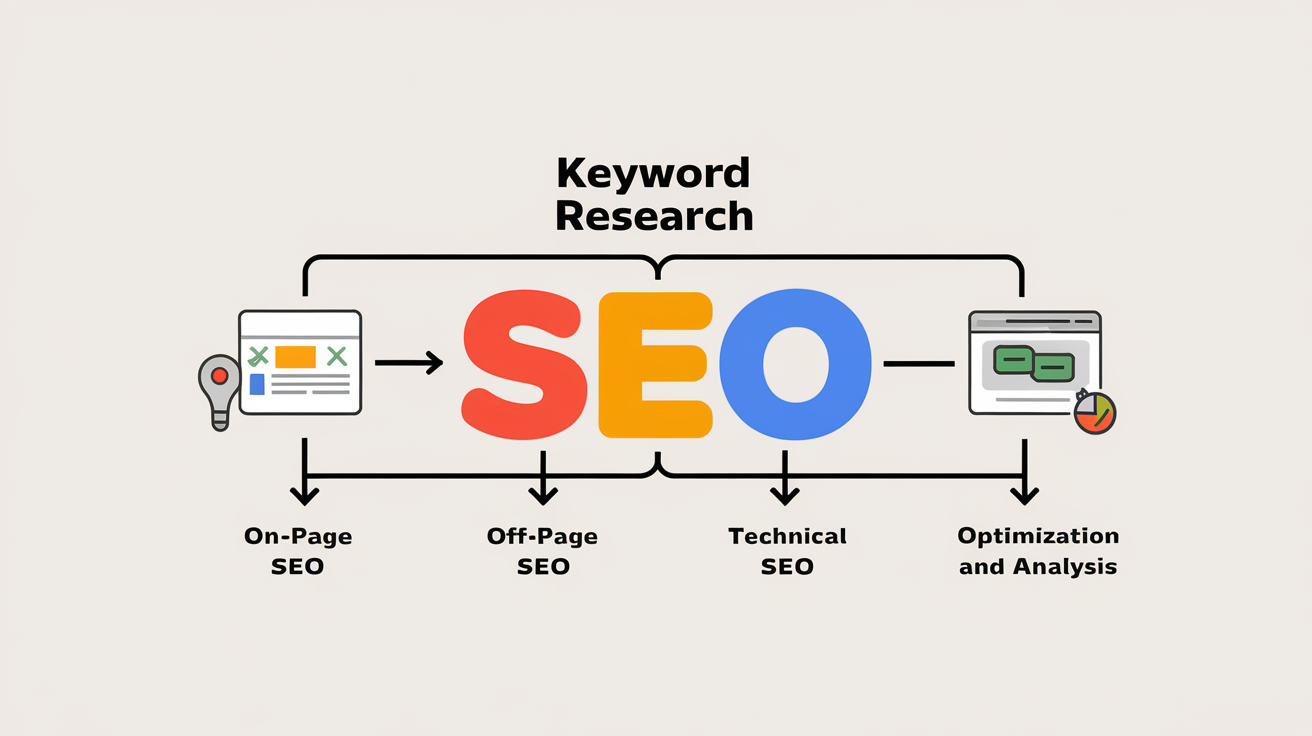

Modern SEO isn’t just about keywords – technical considerations can make or break a site’s search performance. Key technical aspects include managing crawl budget, using structured data, understanding indexing behaviors (like mobile-first indexing), and ensuring your site’s content (including JavaScript) can be properly rendered and indexed by Google.

Crawl Budget: Crawl budget describes how many pages Googlebot can and wants to crawl on your site within a given time frame. Google defines it as “the number of URLs Googlebot can and wants to crawl,” based on two factors: crawl rate limit and crawl demand (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget). For small websites, crawl budget isn’t usually a limiting factor; Google will crawl most or all pages eventually. But for very large sites (with tens of thousands or millions of pages) or sites that update very frequently, crawl budget becomes important.

- Crawl rate limit is Google’s way of not overwhelming your server – it’s the maximum fetching rate Googlebot will use. If a server is fast and responding well, the limit goes up; if it slows down or returns errors, Googlebot slows down. Search Console historically offered a setting that let site owners throttle Googlebot, though Google has since retired that crawl rate limiter tool. Essentially, the limit is “the number of simultaneous parallel connections Googlebot may use to crawl, and the time between fetches” (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget).

- Crawl demand depends on how much Google wants to crawl your pages. This demand is higher for popular pages (that get more traffic or have more links) and for content that changes often or is new (to avoid content getting “stale” in the index) (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget). If a site has many pages that are low-value or duplicate, the demand to crawl those is low.
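The crawl rate behavior described above (speed up when the server is healthy, back off when it slows down or errors) can be pictured with a toy model. This is only an illustrative sketch: the class name, thresholds, and multipliers are all hypothetical and are not Google’s actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateLimiter:
    """Toy model of a polite crawler's delay between fetches (all values hypothetical)."""

    delay: float = 1.0       # current seconds between fetches
    min_delay: float = 0.25  # fastest the crawler will ever go
    max_delay: float = 60.0  # slowest the crawler will ever go

    def record(self, status: int, response_seconds: float) -> float:
        """Update the delay after one fetch and return the new value."""
        server_struggling = status >= 500 or status == 429 or response_seconds > 2.0
        if server_struggling:
            # Back off quickly when the server errors or responds slowly.
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            # Speed up gently while the server stays healthy.
            self.delay = max(self.delay * 0.9, self.min_delay)
        return self.delay


limiter = CrawlRateLimiter()
for status, seconds in [(200, 0.2), (200, 0.3), (503, 0.1), (200, 0.2)]:
    print(status, seconds, round(limiter.record(status, seconds), 3))
```

The practical point for site owners is the same as in the text: a fast, error-free server leaves room for a higher crawl rate, while slow responses and 5xx errors reduce it.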

Google combines these to allocate crawl budget. Practically, this means large sites should optimize so that Googlebot focuses on their important pages. Avoid creating thousands of low-quality or duplicate pages (which waste crawl budget), fix broken links or infinite URL loops, and consolidate duplicate content via canonical tags. Things like faceted navigation, session IDs in URLs, or endless calendar pages can create a crawl trap – Google lists faceted navigation, duplicate content, soft error pages, hacked pages, infinite URL spaces, and low-quality/spam content as factors that negatively impact crawl budget (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget). On the flip side, a well-structured site with clean URLs, good internal linking, and XML sitemaps can guide Googlebot efficiently. Google also stresses that crawl rate (frequency) is not a ranking factor – crawling more doesn’t mean higher ranking, it just means those pages are being discovered (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget). But ensuring your key pages are crawled (and not wasting Googlebot’s time on trivial pages) helps those pages get indexed and available to rank.
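One way to spot URL variants that waste crawl budget is to normalize URLs and group the ones that collapse to the same page. The sketch below assumes a hypothetical list of parameters that do not change page content (`sessionid`, `sort`, `color`, and tracking tags); a real site would need to decide its own list, and whether a facet such as `color` deserves its own indexable page.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names that create duplicate views of the same content.
IGNORED_PARAMS = {"sessionid", "sort", "color", "utm_source", "utm_medium"}


def normalize(url: str) -> str:
    """Drop ignorable parameters, sort the rest, and lowercase the host."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def group_duplicates(urls):
    """Map each normalized URL to the list of raw URLs that collapse to it."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize(url), []).append(url)
    return groups


crawled = [
    "https://www.example.com/shoes?color=red&sessionid=abc",
    "https://www.example.com/shoes?sort=price",
    "https://www.example.com/shoes?page=2&sort=price",
]
for target, variants in group_duplicates(crawled).items():
    print(target, "<-", len(variants), "variant(s)")
```

Groups with many variants are candidates for a canonical tag pointing at the normalized URL, or for blocking the parameter combinations that generate them.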

Recent update: In late 2024, Google updated its documentation to emphasize mobile and desktop consistency for crawl budget. Large websites with separate mobile sites should maintain the same link structure on mobile as on desktop; if the mobile site omits links that are on desktop, Googlebot (which primarily crawls mobile version) might miss pages, slowing down discovery (Google Updates Crawl Budget Best Practices). This underscores that for mobile-first indexing, your mobile site needs to contain all critical content and links from the desktop site – or risk those pages not being crawled or indexed.
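A simple parity check can compare the links found in the desktop and mobile versions of a page. The sketch below uses only the standard library and works on HTML strings you have already fetched; the sample markup is hypothetical, and it compares raw `href` values without resolving relative URLs.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)


def extract_links(html: str) -> set:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


def missing_on_mobile(desktop_html: str, mobile_html: str) -> list:
    """Links present on desktop but absent from the mobile version."""
    return sorted(extract_links(desktop_html) - extract_links(mobile_html))


desktop = '<nav><a href="/shoes">Shoes</a><a href="/sale">Sale</a><a href="/about">About</a></nav>'
mobile = '<nav><a href="/shoes">Shoes</a><a href="/about">About</a></nav>'
print(missing_on_mobile(desktop, mobile))
```

Any link reported here is one that a mobile-first crawl of the page would not discover from that page.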

Structured Data: Structured data is a standardized format (often JSON-LD markup in HTML) that provides explicit clues to search engines about a page’s content. Google uses structured data to better understand what is on the page and to enable special search result features. For example, adding Schema.org markup for a recipe (with fields for ingredients, cooking time, calories, etc.) helps Google understand that the page is a recipe and makes it eligible to appear as a rich recipe result with star ratings and cook time (Structured data and SEO: What you need to know in 2025). As Search Engine Land notes, structured data “helps search engines understand [page content] more effectively” and Google uses it to create enhanced listings and rich results on SERPs (Structured data and SEO: What you need to know in 2025). Common schema types include Articles, Products, Reviews, FAQs, and Events. By implementing appropriate schema markup, a site can gain rich snippets (like review stars, FAQ dropdowns, breadcrumbs, or a site search box), which increase visibility and click-through rates. Structured data doesn’t directly boost rankings for the page content, but the improved understanding and rich presentation can indirectly benefit SEO by drawing user attention. It’s increasingly seen as an SEO best practice rather than an optional nice-to-have – as of 2024, structured data is considered “an essential part” of SEO strategy for improving visibility (Structured data and SEO: What you need to know in 2025). Webmasters should ensure their structured data is correctly implemented (using Google’s Rich Results Test) because errors can prevent eligibility for rich results (Structured data and SEO: What you need to know in 2025). In short, structured data speaks Google’s language, allowing it to better interpret and present your content – critical for standing out in an AI-driven, feature-rich search results page.
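As an illustration of the recipe example, the following sketch builds a JSON-LD block with Python’s `json` module. The property names (`recipeIngredient`, `cookTime`, `nutrition`, `aggregateRating`) come from Schema.org; the helper function, its parameters, and the sample values are hypothetical, and the output is not guaranteed to include every property Google requires for a recipe rich result.

```python
import json


def recipe_jsonld(name, ingredients, cook_minutes, calories, rating, rating_count):
    """Return a <script> tag containing Schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": ingredients,
        # Schema.org expects durations in ISO 8601 form, e.g. PT15M for 15 minutes.
        "cookTime": f"PT{cook_minutes}M",
        "nutrition": {
            "@type": "NutritionInformation",
            "calories": f"{calories} calories",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "ratingCount": rating_count,
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


snippet = recipe_jsonld("Pancakes", ["flour", "milk", "eggs"], 15, 250, 4.7, 128)
print(snippet)
```

The generated tag would be placed in the page’s HTML and then validated with the Rich Results Test mentioned above.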

Indexing Methods (Mobile-First & More): Google’s indexing has shifted to a mobile-first approach. This means Google primarily indexes the mobile version of websites rather than the desktop version. By 2019, mobile-first indexing was enabled by default for new sites, and as of 2023 Google announced the shift is complete for the whole web (Google says mobile-first indexing is complete after almost 7 years). For SEO, this means your mobile site is crucial – any content or link not present on mobile might not be indexed or considered for ranking. Ensuring parity between desktop and mobile content is essential. Technical choices such as responsive design or dynamic serving also affect indexing when implemented incorrectly. Beyond mobile-first, note that Google doesn’t index every page it crawls. It makes decisions in the indexing pipeline: pages with thin or duplicate content might not be indexed at all. Using canonical tags for duplicate pages helps consolidate indexing. If a page is crawled but not indexed (a status you can see in Google Search Console), it’s often a sign that Google wasn’t convinced the page is unique or valuable enough to index relative to other similar content. Indexing can also be influenced by technical factors. Pages blocked by robots.txt won’t be crawled, although a blocked URL can still end up indexed without its content if other pages link to it. Pages with a meta noindex tag will be dropped from the index, but only if Googlebot is allowed to crawl the page and see the tag, so noindex (not a robots.txt block) is the reliable way to keep a page out of the index. Another important aspect is that Google’s index now blends different content types – web pages, PDFs, images, videos, and even some structured data (like job postings) all co-exist. Using the proper HTML tags and sitemaps for images or videos can help those get indexed too.
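To audit the indexing hints a page sends, a small parser can read its robots meta tag and canonical link. This is a minimal sketch using the standard library; the class and function names are hypothetical, and it inspects only the HTML you pass in (it does not consider HTTP headers such as `X-Robots-Tag`).

```python
from html.parser import HTMLParser


class IndexingHints(HTMLParser):
    """Record whether a page asks not to be indexed and which canonical URL it declares."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = {key: (value or "") for key, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            directives = {
                item.strip().lower()
                for item in attributes.get("content", "").split(",")
            }
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True
        elif tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None


def inspect_page(html: str) -> IndexingHints:
    hints = IndexingHints()
    hints.feed(html)
    hints.close()
    return hints


page = (
    '<head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://www.example.com/shoes"></head>'
)
hints = inspect_page(page)
print("noindex:", hints.noindex, "| canonical:", hints.canonical)
```

Running this over a list of important pages makes it easy to catch a stray noindex or a canonical tag pointing at the wrong URL.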

Rendering (JavaScript & Dynamic Content): In the past, one SEO challenge was that content loaded via JavaScript might not get indexed, because crawlers historically had difficulty processing JS. Google has significantly improved in this area. Googlebot now uses a headless Chromium browser to render pages almost like a regular user’s browser would. In fact, Google confirms it “renders all HTML pages” for indexing, even JavaScript-heavy sites (Google Renders All Pages For Search, Including JavaScript-Heavy Sites). During indexing, Google’s system queues pages for rendering after the initial HTML fetch and executes the JavaScript to see any additional content or links. Zoe Clifford from Google’s rendering team explained that rendering means “running a browser in the indexing pipeline” so Google can index the fully loaded page as a user would see it (Google Renders All Pages For Search, Including JavaScript-Heavy Sites). This is resource-intensive (“expensive,” as Google says), but Google has committed to it to ensure it doesn’t miss important content that relies on JS (Google Renders All Pages For Search, Including JavaScript-Heavy Sites). In 2019, Googlebot was upgraded to an “evergreen” version – meaning its rendering engine is regularly updated to the latest Chrome version, keeping up with modern web features (Google Renders All Pages For Search, Including JavaScript-Heavy Sites).

For SEO, this means most content from modern web apps can be indexed, but there are still best practices. Extremely complex or slow-loading scripts might delay indexing. Google will not wait forever for a script – if your page requires a user interaction or long time to fetch data, that content might not be seen. Thus, techniques like server-side rendering or hydration (rendering important content on the server, to deliver it in the initial HTML) can help search engines see critical content faster. Google has advised not to lazy-load all content; critical text should not depend on a user scroll or click. Also, while Googlebot can execute JS, other search engines might not be as advanced – so a progressive enhancement approach is prudent. Still, the bottom line is that Google can index client-side rendered content now. If you want to be sure, you can use Google’s Search Console “URL Inspection” tool or the Rich Results Test to see a page’s rendered HTML. If Google’s rendered view misses content, consider adjusting your methods.
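A quick way to see whether critical text depends on JavaScript is to check if it is already present in the raw HTML the server returns. The sketch below strips script and style contents and searches what remains; the sample pages and function name are hypothetical, and it says nothing about what Google sees after rendering, only about the initial HTML.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that appears outside <script> and <style> elements."""

    SKIPPED = ("script", "style")

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def in_initial_html(raw_html: str, phrase: str) -> bool:
    """Return True if the phrase is in the server-delivered HTML text."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    return phrase.lower() in " ".join(parser.chunks).lower()


# Client-side rendered shell: the heading exists only inside a script.
csr_page = '<div id="root"></div><script>render("Blue suede shoes")</script>'
# Server-side rendered page: the heading is in the markup itself.
ssr_page = '<div id="root"><h1>Blue suede shoes</h1></div>'

print(in_initial_html(csr_page, "Blue suede shoes"))
print(in_initial_html(ssr_page, "Blue suede shoes"))
```

If a key heading or paragraph fails this check, it relies on rendering to be seen, which is where the URL Inspection tool’s rendered HTML becomes the next thing to verify.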

One caveat: Google only renders HTML content. Non-HTML content types like PDFs are indexed differently (no rendering). And Googlebot does not run external resources that are disallowed (e.g., if your JS file is blocked by robots.txt, Googlebot won’t fetch it, possibly missing content). So, ensure you don’t block important JS or CSS files. Also, remember that rendering happens after initial crawl, so very heavy JS sites might see a slight lag between being crawled and fully indexed. But as of 2024, Google’s Martin Splitt has indicated that if it’s HTML, “we just render all of them” for indexing (Google Renders All Pages For Search, Including JavaScript-Heavy Sites) – a reassuring development for JS-driven sites.

3. Ranking Factors & Algorithm Updates

Google’s ranking algorithm uses an array of factors to determine which pages appear first. While Google keeps the exact “recipe” secret, SEO experts and Google’s own communications have identified many important ranking factors. Over the years, Google has also introduced major algorithm updates to improve search quality – some targeting specific spam tactics, others leveraging AI to better understand queries. This section highlights the major ranking factors and a timeline of significant Google algorithm updates.

Major Ranking Factors in Google’s Algorithm

- High-Quality, Relevant Content: Content is often cited as the #1 factor. Google aims to reward pages that best answer the user’s query. This goes beyond just having the keywords – it’s about depth, accuracy, and freshness of information. In 2016, Google confirmed that “content” (along with links) is one of the top two ranking signals (Google Says The Top Three Ranking Factors Are Content, Links & RankBrain). High-quality content is original, comprehensive, and valuable to users. Google’s Panda algorithm (2011) specifically targeted thin or low-quality content, reinforcing that pages with little substance, or duplicate content copied from elsewhere, will rank poorly. Google also uses human quality raters to evaluate search results, and its Search Quality Rater Guidelines introduced the concept of E-A-T: Expertise, Authoritativeness, Trustworthiness (since expanded to E-E-A-T with the addition of Experience). While E-A-T isn’t a direct algorithm, it informs what “good content” looks like. Content produced by expert sources, with authoritative knowledge and trustworthy credentials, tends to perform better – especially for “Your Money or Your Life” topics (like health or finance) where accuracy is critical (A Complete Guide to the Google Panda Update: 2011-21). In short, content that satisfies the user’s intent is crucial. Modern SEO strategies emphasize creating in-depth content that matches what the searcher is looking for, rather than just stuffing keywords (which Google can now easily detect and demote).

- Backlinks (Authority and PageRank): Google’s original innovation, PageRank, evaluated a page’s importance by the quantity and quality of other pages linking to it. Backlinks remain a core ranking factor. A link to your page from a reputable site is seen as a vote of confidence. Not all links are equal – a few links from high-authority, relevant sites carry far more weight than dozens of low-quality links. In fact, manipulating backlinks became a common spam tactic, which led Google to launch the Penguin update (2012) to combat link spam (discussed below). Today, Google’s algorithms evaluate link patterns carefully. Earning natural, editorial links through great content or useful tools is the safest way. In 2016, a Google search quality strategist said links (along with content) are among the top ranking signals in the algorithm (Google Says The Top Three Ranking Factors Are Content, Links & RankBrain). Healthy link profiles (diverse sources, relevant context, no buying/selling of links) help establish a site’s authority. Internal links within your site also help distribute PageRank and signal which pages are most important (e.g., your homepage linking to a subpage tells Google that subpage is important). A solid SEO practice is to audit your backlink profile to remove or disavow spammy links and to continuously seek genuine backlinks via content marketing, PR, or partnerships.

- RankBrain and Semantic Relevance: In 2015, Google introduced RankBrain, a machine-learning AI system that helps process search queries. RankBrain is an AI-based ranking component that can interpret queries and page content in a more human-like way. It looks at words and phrases and tries to understand their relationships and concepts, even for a query Google hasn’t seen before. For example, if someone searches “what’s the title of the consumer at the highest level of a food chain,” Google’s systems (with RankBrain’s help) figure out this refers to the concept of an “apex predator,” even though the query didn’t use those words (How AI powers great search results). This ability to connect synonyms or implicit meaning means pages can rank for queries even if they don’t contain the exact keywords, as long as Google deems them relevant. RankBrain adjusts ranking by analyzing how well results satisfy users for unfamiliar or long-tail queries. Google also said RankBrain became one of its top 3 ranking signals within a year of launch (Google Says The Top Three Ranking Factors Are Content, Links & RankBrain). For SEO, this underscores the importance of natural language content and covering topics in a semantically rich way – focusing purely on exact-match keywords is less important than covering the intent and related concepts of a query.

- On-Page Optimization (Keywords & HTML Elements): Traditional on-page SEO factors still matter. This includes using the target keywords in critical places – like the page title tag, headings (H1, H2), and naturally throughout the content – so that Google can easily tell what the page is about. Optimizing title tags is especially important, as the title not only helps rankings but is also the headline users see on the SERP. Each page should have a unique, descriptive title tag that incorporates primary keywords (while still reading naturally) (10 SEO Best Practices to Help You Rank Higher). Similarly, a well-written meta description can improve click-through (though meta descriptions aren’t a direct ranking factor, they influence user behavior). Other on-page factors include URL structure (clean, descriptive URLs can provide a minor SEO benefit and better UX), image alt text (helps with image search and accessibility), and using schema markup as described. While Google is smart about synonyms, including relevant keywords and variations in your content still helps with relevance – just avoid overdoing it. Keyword stuffing (cramming keywords excessively) is considered a form of spam and can hurt rankings (Google Launches “Penguin Update” Targeting Webspam In Search Results). The best practice is to use keywords in a way that feels natural and helpful to the reader (10 SEO Best Practices to Help You Rank Higher).

- User Experience & Engagement: Google wants to reward pages that users find satisfying, and SEO discussions often point to interaction signals such as click-through rate (CTR) from search results, dwell time (how long a user stays on the page, which suggests whether the content was useful), and bounce rate (a user who quickly returns to the search results may not have found what they wanted). Google is cautious about confirming that it uses such behavior metrics directly in the algorithm, but it does use proxy signals of good user experience. In 2021, Google rolled out the Page Experience Update, which includes Core Web Vitals – metrics for loading speed, interactivity, and visual stability – as ranking factors. Sites that load quickly, are stable (no content shift), and respond fast to input get a small ranking boost as they provide a better UX (The Future of SEO: Trends to Anticipate in 2025). Mobile usability is another aspect – since most users are on mobile, Google downranks pages that aren’t mobile-friendly (this was the rationale behind the 2015 “Mobilegeddon” update that gave mobile-friendly pages a boost). Secure and safe browsing is also important: HTTPS is a lightweight ranking factor (secure sites are preferred in results), and Google may downrank a site or warn users if it has malware or isn’t safe. A smooth, intuitive website that answers questions, loads fast, and is accessible on all devices will fulfill a user’s needs and thus earn higher rankings. In short, great content must be delivered with great UX. This also ties back to E-A-T: a positive reputation, clear authorship, and site security build trust (the “T” in E-A-T), which is crucial especially in sensitive topics.

- Localization and Personalization: While not exactly “universal” ranking factors, it’s worth noting Google customizes results heavily based on context. A user’s location will trigger local ranking factors – for example, Google might rank a less “authoritative” site higher if it’s a local business near the user. For local queries, factors like Google Business Profile (formerly Google My Business) listing info, reviews, and proximity come into play. Personalization (like search history) plays a relatively minor role, but Google might adjust results if it knows, for instance, that a user frequently clicks on a certain domain. For most SEO work, you assume a generic user, but be aware that there isn’t one single “ranking” for a query – it can vary by location, device, and other context signals (Crawling, indexing and ranking: Differences & impact on SEO).

These are just some of the major factors. Google has confirmed it uses “hundreds of signals” in its algorithms (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget), and the weight of factors can also vary by query. For example, for a query like “latest news”, freshness of content is very important; for “how to tie a tie”, a highly authoritative how-to with a good video might win. But as a rule of thumb, quality content and quality backlinks remain fundamental, enhanced by technical optimizations and user-centric improvements.
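To give a feel for the PageRank idea mentioned under backlinks, here is a textbook-style power-iteration sketch over a tiny hypothetical link graph. It illustrates the published concept only (a page is important if important pages link to it); the damping value of 0.85 is the commonly quoted default, and none of this reflects how Google’s production ranking systems work today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Compute simplified PageRank scores for a dict of page -> list of linked pages."""
    pages = sorted(set(links) | {target for targets in links.values() for target in targets})
    count = len(pages)
    rank = {page: 1.0 / count for page in pages}

    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {page: (1.0 - damping) / count for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / count
        rank = new_rank
    return rank


# Hypothetical three-page site: both other pages link to "home".
site_links = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
}
scores = pagerank(site_links)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the page with the most incoming links ends up with the highest score, which mirrors the point in the text that internal linking signals which pages matter most.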

Key Google Algorithm Updates (Panda, Penguin, BERT & more)

Over the years, Google has named and launched many significant algorithm updates that SEOs track closely. Below is a summary of some of the most important updates and what they changed:

- Google Panda – February 2011. Panda was a major update aimed at elevating quality content and demoting “content farms” and other low-quality sites. It introduced a new way for Google to assess site quality at scale, reportedly using machine learning to approximate human quality ratings. The initial Panda release had a huge impact – about 12% of all search queries were affected (A Complete Guide to the Google Panda Update: 2011-21), making it one of the widest impact updates ever. Sites with thin content, high ad-to-content ratio, or that just aggregated info from elsewhere saw drops. Panda essentially became part of Google’s core algorithm, continually working in the background to filter out shallow or spammy content. Recovery from Panda required significantly improving content across the site (for example, one case study involved rewriting or removing 100+ low-quality pages to recover rankings) (A Complete Guide to the Google Panda Update: 2011-21). Panda also pushed the importance of E-A-T and user satisfaction – since then, creating unique, valuable content has been imperative for SEO.

- Google Penguin – April 2012. Codenamed Penguin, this update targeted webspam, specifically manipulative link practices. Upon launch, Google said Penguin would impact ~3% of queries (in English) (Google Launches “Penguin Update” Targeting Webspam In Search Results). Its goal was to catch sites that had been cheating the ranking system through tactics like buying links, participating in link schemes, or excessive exact-match anchor text in backlinks. Sites with unnatural link profiles (e.g., thousands of links from low-quality sites or obvious link networks) were hit with ranking penalties. Penguin made SEO practitioners shift focus from quantity of links to quality of links. Over the next few years, Google updated Penguin several times and eventually integrated it into the core algorithm in 2016, at which point it started working in real-time (assessing links continuously). The takeaway from Penguin was clear: earning links naturally is the only safe strategy – attempts to spam or manipulate links can result in severe ranking demotions or deindexing. Google even provides a Disavow Tool for webmasters to distance themselves from bad links in the post-Penguin world.

- Hummingbird – August 2013. Google’s Hummingbird update was a complete overhaul of the core search algorithm, aimed at better understanding the meaning behind queries. It was described as the biggest rewrite since 2001 (Google's Hummingbird Update: How It Changed Search). Unlike Panda/Penguin which were tweaks to ranking factors, Hummingbird re-engineered how Google parses searches. It enabled more conversational search and understanding of longer, natural language queries – a nod to the rise of voice search and user expectation for Google to handle complex questions. Interestingly, Hummingbird’s immediate impact was subtle (users didn’t notice a shock to results like Panda/Penguin) (Google's Hummingbird Update: How It Changed Search), but it set the stage for future advancements (like RankBrain and BERT). With Hummingbird, Google became much better at context and intent. For example, it can understand that when you search “five guys near me” you likely mean the restaurant chain Five Guys, not literally five random guys. It also improved the handling of synonyms and concept matching. SEO implication: writing in natural language and covering topics in context became more important, since Google was less reliant on exact keyword matches and more on overall topic relevance.

- RankBrain – 2015. As mentioned in ranking factors, RankBrain is an AI-based component of Google’s algorithm. It wasn’t a one-time “penalty” style update like Panda or Penguin, but its introduction was a milestone. First revealed publicly in October 2015, RankBrain helps Google understand ambiguous or new queries by matching them to known topics. Initially it was used in a fraction of queries, but later Google said RankBrain is used in almost every query and became one of the top signals. In 2016, Google’s engineers confirmed the “top 3” ranking factors were content, links, and RankBrain (Google Says The Top Three Ranking Factors Are Content, Links & RankBrain). Practically, RankBrain led SEOs to place even more emphasis on satisfying search intent. It’s not something you optimize for with a trick – rather, if a page pleases users (they don’t pogo-stick back to search, they find what they need), RankBrain likely helps that page surface for relevant queries. It also means new or rare queries (15% of queries Google sees each day are new (How AI powers great search results)) can be handled better by finding pages that aren’t exact matches but conceptually fit. RankBrain was Google’s first foray into large-scale machine learning for ranking, opening the door for more AI in search.

- Mobile-Friendly Update (Mobilegeddon) – April 2015. This update (not an animal name!) was significant as a response to the shift toward mobile usage. Google began using mobile friendliness as a ranking signal for mobile searches. Websites that were not mobile-optimized (text too small, content wider than screen, etc.) saw drops in mobile rankings. This was a wake-up call to adopt responsive design or mobile-specific sites. In 2018, Google expanded this with the Speed Update which made mobile page speed a ranking factor for mobile searches. Eventually, as we discussed, mobile-first indexing was implemented. So while not one single update, the 2015-2018 period saw a series of changes positioning mobile user experience as critical for SEO.

- Google BERT – October 2019. BERT stands for Bidirectional Encoder Representations from Transformers – essentially, it’s a deep learning algorithm for natural language processing. Google applied BERT to search in order to better understand the nuance and context of words in longer queries or sentences. At launch, Google said BERT would impact 1 in 10 searches in terms of understanding results better (Welcome BERT: Google’s latest search algorithm to better understand natural language), making it one of the biggest additions to search in years. BERT is particularly good at understanding the intent behind search queries with prepositions or conversational structure. For example, in the query “2019 brazil traveler to usa need a visa,” the word “to” and its relation to the other words are important (is a Brazilian traveling to the USA or vice versa?). BERT helped Google grasp that nuance, where older algorithms might misinterpret it. BERT is not a ranking signal in itself; it helps Google understand queries and content so that it can retrieve and rank the right pages. It’s another step toward Google “understanding language like humans.” For SEOs, BERT reinforced that you cannot game the system with awkward keyword phrasing – writing naturally and clearly is rewarded. If your content is well-structured and addresses specific questions clearly, Google’s NLP can match it more accurately to voice queries and long-tail queries. By late 2020, Google had rolled out BERT in over 70 languages and even said it’s used in essentially all English queries to help understanding ([N] Google now uses BERT on almost every English query – Reddit).

- Core Updates (2017-Present): Google now regularly releases Broad Core Algorithm Updates (often just called core updates) every few months (e.g., “March 2019 Core Update,” “June 2021 Core Update,” etc.). These are general improvements to Google’s ranking systems. Unlike named updates (Panda/Penguin), core updates don’t target one thing in particular, so their impact can be broad. Sites might see drops or gains, often related to content relevance and quality adjustments. Google’s advice for those hit by core updates is to focus on content quality and E-A-T (expanded to E-E-A-T in December 2022, adding Experience to Expertise, Authoritativeness, and Trustworthiness) – which suggests these updates further refine how the algorithm assesses content and site trustworthiness. A notable core update was nicknamed the “Medic” Update (August 2018) because it heavily affected health and medical sites, highlighting the importance of expertise and trust in those areas. Core updates remind SEOs that the algorithm is constantly evolving; recovering from a core update downturn often means making substantial site improvements and waiting for the next update to see if those changes are rewarded.

- “Helpful Content” Update – August 2022. This newer update aims to tackle the rise of content written for the algorithm rather than for people. It introduces a site-wide signal that downranks sites with a lot of unhelpful content. This was Google’s answer to clickbait or AI-generated fluff that doesn’t truly help users. It ties into a broader trend: Google increasingly measures content based on user satisfaction. The recommendation is to avoid writing content just to attract search traffic (for example, publishing lots of articles on questions just because they might rank, regardless of expertise). Instead, stay within your area of expertise and provide unique value. This update, along with core updates, enforces that quality over quantity approach.

Each of these updates pushed the SEO industry toward cleaner, user-focused practices. The pattern is clear: tactics that try to shortcut quality (whether by spammy links, thin content, or other gimmicks) get weeded out in the long run. On the other hand, sites that invest in genuinely good content, a great user experience, and legitimate promotion tend to endure and benefit as Google’s algorithms get more sophisticated.

4. Case Studies & Best Practices

Learning from real-world SEO successes and failures is invaluable. Here we look at an example of a successful SEO strategy and distill some best practices that have emerged over time for optimizing a website for Google Search.

SEO Case Study – From 0 to 100,000 Visitors: One notable case study comes from a brand-new website launched without any tricks or prior domain authority. As documented by an SEO agency, the site grew from zero to 100k organic visitors in about 12 months (SEO case study: Zero to 100,000 visitors in 12 months). How? The strategy focused on building a strong foundation of quality content and earning links naturally. In the early stages, they targeted “easy wins” – less competitive, long-tail keywords that they could rank for more quickly to start gaining traffic (SEO case study: Zero to 100,000 visitors in 12 months). They consistently published well-researched articles that provided unique value. At the same time, they engaged in content marketing outreach to secure a few strategic backlinks from relevant websites to their most important pages (SEO case study: Zero to 100,000 visitors in 12 months) (SEO case study: Zero to 100,000 visitors in 12 months). By first capturing those low-hanging fruit keywords and establishing some authority, the site built up credibility in Google’s eyes. Over the year, they progressively targeted higher-volume keywords as the site’s strength grew. The key takeaways were: (a) Patience and a long-term content plan win over “quick SEO hacks”; (b) Earning a handful of high-quality links was more effective than dozens of low-quality ones; and (c) Continually analyzing and iterating on what content resonated with their audience (and by extension, with Google) kept the growth trajectory. This case exemplifies that white-hat SEO strategies – focusing on content and links – can achieve massive growth even starting from scratch.

Another case study on the flip side is recovering from a penalty. For instance, when Google’s Panda update hit sites for thin content, some websites that lost traffic undertook major content overhauls. One site affected by Panda was able to recover by rewriting and improving content on about 100 pages that were low-quality (A Complete Guide to the Google Panda Update: 2011-21). They eliminated duplicate or shallow text and replaced it with more in-depth, useful information. Another site with lots of user-generated profiles (many of which were duplicate bios) encouraged users to provide unique personal info – this significantly reduced duplicate content and improved the site’s perceived quality (A Complete Guide to the Google Panda Update: 2011-21). These examples show that even if a site stumbles, refocusing on quality content can restore SEO performance over time.

SEO Best Practices: While Google’s algorithm is complex, the following best practices have proven effective for most websites. These are “tried-and-tested” strategies that align with Google’s guidelines and help improve visibility:

- Perform Keyword Research & Use Keywords Strategically: Identify the search terms your target audience uses. Tools like Google Keyword Planner or third-party tools can help find relevant keywords and questions. Use those keywords naturally in your content – especially in the title, headings, and early in the body – to signal relevance (10 SEO Best Practices to Help You Rank Higher). Best practice: Focus each page on a specific topic/keyword cluster and avoid keyword stuffing. For example, if you have a page about electric cars, using related terms like “EV charging,” “battery range,” etc., in a natural way can improve the page’s relevance for a range of queries.

- Create High-Quality, Helpful Content: Content truly is king in SEO. Write content that satisfies the user’s intent. Ask, “Does my page answer the query better than other pages out there?” Content should be original, informative, and ideally offer a unique perspective or additional value (like original research, graphics, or expert insights). Google’s documentation and SEO experts repeatedly emphasize making content people-centric. One guide suggests publishing helpful content tailored to your specific audience as one of the best practices, because it makes Google see your site as valuable (10 SEO Best Practices to Help You Rank Higher). This could mean creating comprehensive guides, how-tos, FAQs, videos – whatever format best addresses the topic. Keep content up-to-date as well; freshness can matter for certain topics.

- Optimize Title Tags and Meta Descriptions: Every page should have a unique title tag that clearly describes its content and includes the primary keyword. This is crucial for ranking and for enticing clicks. A good title is usually 50-60 characters and written to attract interest (but avoid clickbait or all-caps, etc.). Meta descriptions should likewise be unique and persuasive, around 150-160 characters, summarizing the page. While not a direct ranking factor, a good meta description can improve CTR, which is beneficial. Think of the title and snippet as your “advertisement” in the SERP – make it count.

- Use Header Tags (H1, H2, H3…) to Structure Content: Organize your content with headings and subheadings. The <h1> should be the page title (often similar to the title tag). Break up sections with <h2> subheadings for each main point, and maybe <h3> for subsections. This not only improves readability but also gives search engines a better understanding of the content hierarchy (10 SEO Best Practices to Help You Rank Higher). Well-structured content often performs better because users can navigate it easily (and Google may jump to a relevant section or feature a “featured snippet” answer from a well-structured page).

- Optimize for Page Experience (Speed & Mobile): Ensure your website loads quickly and is mobile-friendly. Use Google’s PageSpeed Insights to identify speed bottlenecks – common fixes include compressing images, enabling browser caching, and minimizing CSS/JS files. A faster site not only pleases users but also avoids any search ranking penalties for slow performance. Mobile usability is non-negotiable: use responsive design so the same content and URLs serve all devices. Make sure text is readable on small screens and buttons/links are easily tappable. With Core Web Vitals now a ranking factor, aim for good LCP (Largest Contentful Paint), INP (Interaction to Next Paint, which replaced First Input Delay as the responsiveness metric in March 2024), and CLS (Cumulative Layout Shift) scores (The Future of SEO: Trends to Anticipate in 2025). Google Search Console’s “Page Experience” and “Core Web Vitals” reports help monitor this. In practice, a site that’s fast and mobile-friendly will likely rank higher than an equal-quality site that isn’t.

- Improve Site Structure & Navigation: A clear site architecture helps both users and crawlers. Important pages should be reachable within a few clicks from the homepage. Use logical categories for content. Implement internal linking generously – link related articles to each other, and ensure your top pages (like cornerstone content) have many internal links pointing to them (10 SEO Best Practices to Help You Rank Higher). Internal links act like roads connecting your site’s content, and they pass some ranking signals around. Also, maintain a clean URL structure (user-friendly URLs with keywords, e.g., example.com/seo/best-practices instead of example.com/index.php?id=123). Good navigation (menus, breadcrumbs) and interlinking can also increase the time users spend on your site, exploring multiple pages – a positive engagement signal.

- XML Sitemap & Robots.txt: Provide an XML sitemap listing all important URLs – this helps Google discover your pages, especially those not well linked. Submitting it in Google Search Console can ensure Google knows about all parts of your site (10 SEO Best Practices to Help You Rank Higher). Use the robots.txt file carefully – disallow only sections that you truly don’t want crawled (e.g., admin pages), but make sure you’re not accidentally blocking important content. (Robots.txt controls crawling, not indexing: it doesn’t remove pages from Google, and a blocked URL can still appear in results if other pages link to it, but a misconfigured file can stop Google from reading important content.)

- Earn Authoritative Backlinks: We touched on this as a ranking factor – it’s also a best practice to have a strategy for acquiring links in a natural way. This could be through content marketing (creating infographics or research that others cite), writing guest posts on relevant industry sites, getting listed in industry directories or review sites, or simply building relationships that lead to backlinks. Avoid spammy link-building services or link exchanges – they do more harm than good post-Penguin. Focus on quality: one link from a well-respected site in your niche is worth more than 100 random links. Also, diversify your anchor text (most links should use your brand name or natural phrases, not always the exact keyword, to avoid looking manipulative).

- Leverage Structured Data: As discussed earlier, adding structured data (schema markup) can make your site eligible for rich results. Identify which schema types suit your content – e.g., Articles, FAQ, Product, Recipe, Organization, etc. Implementing these can enhance how your listing appears (with images, stars, FAQs, etc.), which improves CTR. Since more searches are happening via voice (Google Assistant reads out answers often from structured data/featured snippets), using schema can indirectly help with voice SEO as well. It’s an emerging best practice to include relevant schema on as many pages as possible.

- Monitor Performance & Fix Issues: SEO is iterative. Use Google Search Console and analytics tools to monitor how your site is performing. Search Console will report indexing errors (pages Google had trouble crawling or indexing), mobile usability issues, Core Web Vitals metrics, and even security issues if any. It also shows the keywords you’re ranking for and where you might improve. Regularly audit your site for problems: broken links, 404 errors, duplicate title tags, etc., and fix them (10 SEO Best Practices to Help You Rank Higher). A periodic technical SEO audit (checking things like redirects, canonical tags, hreflang for multilingual sites, etc.) ensures your site stays healthy for Google’s crawlers.

- Stay Within Google’s Guidelines: This might sound obvious, but always adhere to Google Search Essentials (formerly the Webmaster Guidelines). Avoid “black hat” tactics like cloaking (showing different content to Googlebot than to users), hidden text, doorway pages (thin pages just to rank for a keyword and funnel to another page), or any kind of link schemes (Google Launches “Penguin Update” Targeting Webspam In Search Results). Google’s algorithms and manual reviewers are adept at catching these, and penalties can be devastating (and hard to recover from). It’s best practice to focus on long-term, sustainable SEO – which means aligning with Google’s goal of delivering the best, most relevant results to users.
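
The title and meta description guidance in this list lends itself to a mechanical check. The following is a minimal sketch in Python, assuming the 50-60 and 150-160 character ranges quoted above (these are rules of thumb from this report, not limits published by Google). The names `audit_snippet`, `check_length`, and `LengthReport` are hypothetical and exist only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed ranges, taken from the rule-of-thumb guidance in this report.
TITLE_RANGE = (50, 60)
DESCRIPTION_RANGE = (150, 160)


@dataclass
class LengthReport:
    field: str
    length: int
    status: str  # "ok", "too short", or "too long"


def check_length(field: str, text: str, bounds: tuple[int, int]) -> LengthReport:
    """Compare the stripped text length with an inclusive (min, max) range."""
    low, high = bounds
    length = len(text.strip())
    if length < low:
        status = "too short"
    elif length > high:
        status = "too long"
    else:
        status = "ok"
    return LengthReport(field, length, status)


def audit_snippet(title: str, description: str) -> list[LengthReport]:
    """Check a page's title tag and meta description against the assumed ranges."""
    return [
        check_length("title", title, TITLE_RANGE),
        check_length("description", description, DESCRIPTION_RANGE),
    ]


if __name__ == "__main__":
    for report in audit_snippet(
        "SEO Best Practices",
        "A short description.",
    ):
        print(f"{report.field}: {report.length} characters ({report.status})")
```

A check like this only flags length; whether the title actually describes the page and attracts clicks still needs a human read.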

By following these best practices, you build a solid SEO foundation. In essence, maximize what helps users (quality content, fast and easy experience) and minimize anything that frustrates users or search engines. Sites that do this tend to thrive in rankings over time. As one SEO playbook succinctly puts it: Make pages primarily for users, not for search engines, but ensure search engines can easily access and understand those pages. Striking that balance is the heart of effective SEO.
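
One of the easier mistakes to catch automatically is a robots.txt rule that blocks pages you want crawled. Here is a small sketch using Python's standard `urllib.robotparser`; the robots.txt contents, the URL list, and the helper name `blocked_urls` are made up for illustration. Note the assumption that the standard-library parser is a good enough stand-in: it does simple prefix matching and does not reproduce every detail of how Googlebot interprets wildcards.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks the whole blog.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

# Hypothetical list of pages the site owner wants in search results.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/seo-guide",
    "https://example.com/products/",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked for Googlebot:", url)
```

Running a check like this against the real file before each deployment is one way to avoid accidentally hiding important sections from crawlers.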

5. Impact of AI & Machine Learning on SEO

Artificial intelligence and machine learning now play a central role in Google’s search algorithms. Google has been integrating AI not only to improve how it ranks results, but also to better understand search queries and content. Key AI-driven systems like RankBrain, BERT, and the newer MUM (Multitask Unified Model) have changed how SEOs approach optimization. Here we explain these systems and how they influence SEO strategy.

RankBrain – Smarter Query Interpretation: RankBrain was Google’s first major foray into using machine learning in search (launched around 2015). As described earlier, RankBrain helps Google interpret queries by associating unfamiliar words or phrases with known concepts (How AI powers great search results). For example, if you search a complex question, RankBrain tries to figure out the meaning behind it and find relevant pages even if they don’t contain the exact query words. This means Google is less reliant on literal keyword matching and more on context and intent. For SEOs, RankBrain’s influence means that writing naturally and covering topics comprehensively is rewarded. You don’t need to create separate pages for every minor keyword variation anymore – Google’s AI can often tell that “how to train a puppy not to bite” is conceptually the same intent as “puppy biting training tips”. Instead of obsessing over each exact keyword, focus on answering the core question and related follow-up questions within your content. Also, because RankBrain is thought to adjust rankings based on user engagement signals (e.g., if users seem to prefer one result over others for a novel query, RankBrain can learn from that), content that truly satisfies users tends to get a boost. There’s no direct optimization “for RankBrain” – it’s more about aligning with user intent and providing value.

BERT – Better Natural Language Understanding: BERT (Bidirectional Encoder Representations from Transformers) is a deep learning model that Google applied to search in 2019 to improve understanding of natural language. BERT is particularly good at understanding the context of words in longer queries or sentences by looking at words before and after them (bidirectionally). For SEO, the arrival of BERT meant Google got much better at handling conversational queries, including voice searches. It can grasp the nuance in queries like “can you get medicine for someone pharmacy” – pre-BERT, Google might have missed the intent (which is about whether you can pick up someone else’s prescription) and just matched keywords like “medicine” and “pharmacy.” Post-BERT, Google understands the context words like “for someone” and provides a more relevant result (Welcome BERT: Google’s latest search algorithm to better understand natural language). BERT also helps Google understand content on pages better. So, if your content is clearly written, Google is more likely to extract the correct meaning. The practical SEO advice in the BERT era is similar to before: write naturally, use words in a clear context. Over-optimized, unnatural phrasing is counterproductive – not only do users dislike it, but Google’s language models might find it confusing or spammy. With BERT, Google can also generate better featured snippets (direct answer boxes) because it can pull answers from deep in a page that truly relate to the nuanced question asked. So a tip: include succinct answers to common questions in your content (which could be picked up as snippets), but also elaborate for depth. The more clearly you answer a question, the easier it is for Google’s AI to identify that answer as helpful.

MUM – The Future of Multimodal Search: In 2021, Google introduced MUM (Multitask Unified Model), an advanced AI system that takes AI in search even further. MUM is touted to be 1000 times more powerful than BERT and is multimodal – meaning it can understand information from different formats together, like text and images (How AI powers great search results) (How AI powers great search results). MUM can also work across languages. While MUM is still in early stages of use, Google has started applying it in specific ways. For example, Google used MUM to improve searches for COVID-19 vaccine information (to better match queries with trusted sources in different languages) (How AI powers great search results). It’s also being used to power features like “Google Lens multisearch,” where a user can take a photo and ask a question about it (combining image and text) (How AI powers great search results). Imagine snapping a picture of a flower and asking “what kind of care does this plant need?” – that’s a multimodal query MUM might handle. Importantly, Google has stated that MUM is not yet used to rank general search results in the way RankBrain, neural matching, or BERT are (How AI powers great search results). But they are exploring its capabilities. In the future, MUM could help answer extremely complex queries that require synthesizing information (the kind of queries that today might require multiple searches). It might also help Google better understand video and audio content.

For SEO, to prepare for MUM and similar AI, we should think beyond just text keywords. Ensure that your images have descriptive alt text and relevant surrounding text (as Google might interpret images in context). Provide transcripts or summaries for video and audio content. Essentially, help the AI understand all elements of your content. Additionally, consider that search is becoming more conversational and multi-faceted – content that addresses topics in depth and anticipates related questions will be well-positioned.
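
As a starting point for the image advice above, the following sketch lists images that have no usable alt text. It uses only Python's standard `html.parser`; the class name `MissingAltFinder` and the sample markup are hypothetical. One caveat to keep in mind: an empty alt attribute is legitimate for purely decorative images, so the output is a list to review rather than a list of errors.

```python
from __future__ import annotations

from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt attribute is absent or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list[str]:
    """Return image sources lacking descriptive alt text, in document order."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


if __name__ == "__main__":
    sample = (
        '<p><img src="pothos.jpg" alt="Pothos indoor plant">'
        '<img src="hero.png"></p>'
    )
    print(images_missing_alt(sample))
```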

Neural Matching and Other AI Systems: Google has other AI-based systems like Neural Matching (rolled out around 2018) which helps Google understand how queries relate to pages in a broad sense (often described as Google’s “super-synonym” system). Neural matching attempts to match the query with pages that might not have the exact terms but are related at a conceptual level – somewhat akin to RankBrain but applied more to the retrieval phase. These AI systems collectively mean Google is less dependent on simple signals like “this page has the same keywords as the query”, and more on “this page seems to be about the same concept the query is asking for.” The upside is that if you produce truly relevant content, Google can find and rank it even for queries you didn’t specifically target. The downside (for some) is that old shortcuts like exact-match domains or keyword stuffing have lost a lot of their power.

AI for Spam Fighting: Google also uses AI to combat spam. In 2021, they announced an AI-based spam-prevention system that reportedly eliminated a vast amount of spammy results. They also use machine learning in identifying malicious or low-quality pages (e.g., through patterns in content or link spam detection). For SEOs, this means the bar is higher – spun or auto-generated content is more easily caught, and link spam networks are more likely to be devalued automatically.

How AI Influences SEO Strategy:

- Focus on Topics & Entities: With AI understanding context, SEO has shifted to a topic-based approach. Instead of creating one page per keyword variation, it’s often better to create a comprehensive guide that covers a broad topic and related subtopics. Google’s semantic algorithms will pick up the relevance. Think in terms of entities (people, places, things, concepts) and how your content connects those. For example, a site about movies should have content interlinking actors, directors, film titles – establishing those semantic relationships.

- Content Quality & Depth: AI like RankBrain and BERT rewards content that thoroughly answers queries. One piece of advice from SEO experts is to build “topic clusters” – a main pillar page covering a broad topic and supplementary pages that delve into subtopics, all interlinked (The Future of SEO: Trends to Anticipate in 2025). This helps demonstrate depth and authority on a subject, which AI can pick up on by analyzing the content comprehensively. Also, incorporate natural language questions and answers in your content (think FAQ sections) to match voice search queries and featured snippet opportunities.

- Write for Humans (Really): We’ve said it multiple times, but it bears repeating because AI is getting extremely good at reading like a human. If your content is awkward or clearly written for bots, AI will not favor it. Google’s advances in understanding language mean the best practice is to use a clear, conversational tone and explain things as if you’re directly answering a user. This approach tends to naturally include the right keywords and synonyms anyway.

- Structured Data & Feed the AI: Help Google’s AI by structuring your data. Use schema, organize your site, label images with alt text, and use descriptive headings. You want to make it as easy as possible for these algorithms to digest your content. The easier you make their job, the more likely your content will be correctly understood and deemed relevant.

- Monitor AI-driven Search Features: Keep an eye on features like featured snippets, People Also Ask, etc. These are often powered by Google’s understanding of language. If you see Google consistently pulling a snippet from a competitor for a question, analyze how that answer is formatted. Maybe providing a concise answer in a paragraph or a bullet list on your page could snag that snippet. With AI, Google can extract answers even from the middle of your paragraph, so ensure key info isn’t too buried.

- Stay Updated on AI Changes: Google’s use of AI is evolving. For instance, they might expand MUM’s role or introduce new models. SEOs should follow Google Search Central blog and announcements. Adapting content strategy to leverage new AI capabilities (e.g., if Google starts using AI to summarize passages, maybe structuring your content to facilitate summarization could help) will be beneficial. For example, Google’s AI might in the future answer complex multi-part questions by combining info from multiple pages. Ensuring your content is easily “snippetable” or could be one of those pieces is forward-looking SEO.
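
The topic-cluster idea in this list (a pillar page and subtopic pages, all interlinked) can be audited with a few lines of code. The sketch below assumes you already have a map of internal links, for example exported from a site crawler; the function name `cluster_link_gaps`, the URLs, and the link map are hypothetical. It reports which pillar-to-subtopic and subtopic-to-pillar links are missing.

```python
from __future__ import annotations


def cluster_link_gaps(
    pillar: str,
    cluster_pages: list[str],
    links: dict[str, set[str]],
) -> list[tuple[str, str]]:
    """Return (source, target) pairs for links the cluster is missing.

    A complete cluster is assumed to mean: the pillar links to every
    subtopic page, and every subtopic page links back to the pillar.
    """
    gaps: list[tuple[str, str]] = []
    for page in cluster_pages:
        if page not in links.get(pillar, set()):
            gaps.append((pillar, page))
        if pillar not in links.get(page, set()):
            gaps.append((page, pillar))
    return gaps


# Hypothetical internal-link map: page URL -> set of URLs it links to.
SITE_LINKS = {
    "/seo-guide": {"/seo-guide/keyword-research", "/seo-guide/link-building"},
    "/seo-guide/keyword-research": {"/seo-guide"},
    "/seo-guide/link-building": set(),
    "/seo-guide/technical-seo": {"/seo-guide"},
}

if __name__ == "__main__":
    subtopics = [
        "/seo-guide/keyword-research",
        "/seo-guide/link-building",
        "/seo-guide/technical-seo",
    ]
    for source, target in cluster_link_gaps("/seo-guide", subtopics, SITE_LINKS):
        print(f"Missing link: {source} -> {target}")
```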

In summary, AI and machine learning have made Google Search much smarter. It can parse language nuances, understand overall context, and even evaluate quality in more human-like ways. For SEO, this means the old tactics of tricking a dumb algorithm have given way to optimizing for an AI that’s trying to think like a human. The guiding principle is to create content and web experiences that delight humans, because Google’s AI is increasingly modeling its ranking decisions on what seems to best satisfy human searchers (The Future of SEO: Trends to Anticipate in 2025) (The Future of SEO: Trends to Anticipate in 2025). It’s a challenging but exciting era – as AI continues to advance, search will become more about knowledge and context, and less about keywords and hacks.

6. Latest Trends & Future Predictions in SEO

The world of SEO is ever-evolving. New technologies and user behaviors are continually reshaping how search works. In recent years, several trends have emerged strongly – and they give hints of where SEO is headed. Key trends include the rise of voice search, the dominance of mobile-first experiences, and Google’s progression toward richer, AI-driven search results. Here we’ll explore these and other emerging trends, and make some future projections.

Voice Search & Conversational Queries: With the proliferation of voice assistants (Siri, Google Assistant, Alexa) and smart speakers, more people are performing searches by voice. Voice searches are often longer and phrased as questions or natural-language requests. For example, a text search might be “weather NYC tomorrow”, whereas a voice query might be “What’s the weather going to be like in New York City tomorrow?” This shift means content optimized for voice should aim to capture conversational keywords and directly answer questions. It’s reported that the increase in voice search has resulted in more long-tail, conversational queries and thus a need for voice search optimization (The Future of SEO: Trends to Anticipate in 2025). SEO best practices to capture voice queries include: using FAQ pages (Q&A format content), incorporating question keywords (who, what, how, best way to, etc.), and ensuring your site content can be featured in snippets (since voice assistants often read out featured snippets as answers). Also, local voice search is huge (e.g., “near me” queries). Keeping your Google Business Profile (formerly Google My Business) info up-to-date and including natural language in reviews or Q&As can help. As voice search grows, we predict Google will get even better at semantic search – understanding intent and context – so websites that mirror natural speech patterns and directly address common questions will have an edge. In summary, optimize for the way people speak, not just how they type.
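
To make Q&A content machine-readable, FAQ pages are commonly marked up with schema.org's FAQPage type in JSON-LD. The sketch below builds that markup from question and answer pairs using Python's standard `json` module; the helper name `faq_jsonld` and the sample question are hypothetical. Adding the markup does not guarantee any rich result or voice answer, since Google decides when to use it.

```python
from __future__ import annotations

import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    markup = faq_jsonld(
        [("How do I stop a puppy from biting?", "Redirect the puppy to a chew toy.")]
    )
    # The JSON goes inside a script tag of type application/ld+json in the page.
    print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The questions and answers in the markup should match text that is visible on the page itself.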

Mobile-First Indexing & Mobile UX: We’ve discussed mobile-first indexing – Google now uses the mobile version of content for indexing and ranking. The trend behind this is clear: mobile is the primary platform for search. Therefore, mobile SEO = SEO. Going forward, sites that do not function well on mobile will struggle to be visible on Google. Recent data shows mobile searches continue to outnumber desktop, and Google has fully shifted to mobile-first indexing (Google says mobile-first indexing is complete after almost 7 years). Beyond indexing, user behavior trends show people often start searches on mobile (even if they might continue later on desktop for complex tasks). Emerging mobile trends include the rise of mobile wallets and click-to-call – so if you’re a local business, having phone numbers and tap-to-call buttons is important. Also, ensure any intrusive interstitials (pop-ups) on mobile are minimized – Google penalizes sites with aggressive pop-ups that cover content on mobile. Result pagination is also worth watching: Google introduced continuous scrolling on mobile in 2021 (replacing the old “page 2” with a longer scroll) but retired it in 2024 and returned to paginated results, so content that sits just beyond the first page again gets less exposure. In the future, expect Google to integrate more of the mobile app experience into search – for instance, indexing content from apps or offering instant apps if available. Prediction: As 5G networks expand, mobile users will expect even richer content (videos, AR experiences) quickly. So optimizing for mobile might also include optimizing for new content types like AR (imagine users searching for how furniture would look in their room – AR search results could show that). For now, the actionable insight is: make mobile UX seamless – fast loading, easy navigation, accessible content – as mobile-first is now simply the norm.

“Google as an Answer Engine” & Zero-Click Searches: Over the past few years, Google has transformed from a search engine that directs you to other sites into an answer engine that often provides answers directly on the results page. We see this with featured snippets, Knowledge Graph panels, weather widgets, stock prices, unit converters, and so on. A large percentage of searches now result in “zero clicks” – the user gets what they need from the SERP itself without clicking through. This is not exactly an SEO-friendly trend, as it can reduce traffic (e.g., if someone just needed a quick fact). However, it means SEO strategy must account for on-SERP optimization. This could mean structuring your content to become that featured snippet or answer. For instance, if you provide a clear step-by-step list for “how to boil an egg,” you increase the chances Google uses your content in a snippet (and possibly attributes your site). Even if the user doesn’t click, it builds brand visibility and credibility. Also, consider optimizing for rich results like People Also Ask (PAA) questions. If you identify common PAAs relevant to your content, you can include those questions and answers on your site to appear there. The Knowledge Graph is another aspect – ensure your organization has a strong presence online so that Google’s knowledge panel (if one appears for your brand) has accurate info (you can influence this by using schema markup for Organization, linking Wikipedia, etc.). The overall trend is Google is trying to reduce the steps for a user to get information. SEO in the future will need to balance driving clicks with providing value directly on Google. Businesses might need to adapt by treating Google’s results page as an extension of their brand experience (for example, by managing how their content appears in those rich results).

Visual Search & Multi-Modal SEO: As mentioned with MUM, search is becoming more visual and multi-modal. Google Lens allows users to search using their camera – pointing at an object to identify it or find similar products. Pinterest and other platforms have also popularized visual search. For e-commerce and local businesses, this trend is significant. It means you should optimize images not just with alt text, but also by providing high-quality, relevant imagery that Google can understand. Using descriptive file names and possibly schema (like ImageObject) can help. We foresee a future where users might take a picture of a plant and search “how to care for this” – and Google will combine image recognition with text search (something they’ve started testing with Lens’s “Multisearch”). To capture this, ensure your images are relevant and maybe include small text captions that associate the image with certain keywords (for example, an image of a pothos plant with a caption “Pothos indoor plant”). Additionally, video SEO is on the rise. Google shows key moment clips for YouTube videos in search results. Using structured data like VideoObject and providing transcripts can help videos be better indexed and shown for search queries. As bandwidth and content preferences shift, more users may prefer a quick video answer – so incorporating video content (and optimizing it for search) could be crucial.
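
To make the VideoObject suggestion concrete, here is a rough sketch that builds the markup with a transcript and key-moment clips. The function names, video title, URLs, and timings are all hypothetical; the property names follow the schema.org VideoObject and Clip types, and durations use the ISO 8601 format those types expect.

```python
import json


def iso8601_duration(total_seconds: int) -> str:
    """Convert a length in seconds to an ISO 8601 duration such as PT1M33S."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    out = "PT"
    if hours:
        out += f"{hours}H"
    if minutes:
        out += f"{minutes}M"
    if seconds or out == "PT":
        out += f"{seconds}S"
    return out


def video_jsonld(name, description, thumbnail_url, upload_date,
                 content_url, length_seconds, transcript, clips):
    """Build a schema.org VideoObject dict; clips are (name, start, end) in seconds."""
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,
        "contentUrl": content_url,
        "duration": iso8601_duration(length_seconds),
        "transcript": transcript,
        # Each Clip describes one key moment inside the video.
        "hasPart": [
            {
                "@type": "Clip",
                "name": clip_name,
                "startOffset": start,
                "endOffset": end,
                "url": f"{content_url}?t={start}",
            }
            for clip_name, start, end in clips
        ],
    }


# Placeholder values for illustration only.
video = video_jsonld(
    name="How to care for a pothos plant",
    description="Watering, light, and repotting basics.",
    thumbnail_url="https://www.example.com/pothos.jpg",
    upload_date="2024-01-15",
    content_url="https://www.example.com/videos/pothos.mp4",
    length_seconds=93,
    transcript="Today we look at watering, light, and repotting...",
    clips=[("Watering", 0, 40), ("Light", 40, 93)],
)
print(json.dumps(video, indent=2))
```

The ?t= timestamp pattern in the clip URLs is an assumption about how the hosting page handles deep links; adjust it to whatever the player actually supports.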

Core Web Vitals & User Experience Continue to Matter: Google’s Page Experience update, which brought Core Web Vitals into ranking, was one clear instance of Google putting user experience metrics into the algorithm. We expect Google will continue to refine these metrics. Responsiveness to user input is one example: Interaction to Next Paint (INP) replaced First Input Delay as the responsiveness metric in March 2024, and Google may yet introduce new metrics for smoothness of experience. While Core Web Vitals are unlikely to drastically shuffle rankings today (content relevance still outweighs them), the trend is that performance and UX are part of SEO now. It’s wise to treat SEO as not just “search engine optimization” but “search experience optimization”: making your result the most appealing and satisfying option for users. This could even extend to things like accessibility (sites that are more accessible might indirectly perform better because more users can successfully use them, lowering bounce rates).
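
As a rough illustration of how Core Web Vitals are judged, the sketch below rates field measurements against Google's published thresholds for Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS), evaluated at the 75th percentile of page loads. The function names and sample numbers are hypothetical, and the nearest-rank percentile used here is a simplification of how real reporting tools aggregate data.

```python
import math

# (good, poor) boundaries: at or below "good" passes, above "poor" fails.
THRESHOLDS = {
    "lcp_ms": (2500, 4000),   # Largest Contentful Paint, milliseconds
    "inp_ms": (200, 500),     # Interaction to Next Paint, milliseconds
    "cls": (0.1, 0.25),       # Cumulative Layout Shift, unitless
}


def percentile_75(samples):
    """Nearest-rank 75th percentile of a non-empty list of measurements."""
    ordered = sorted(samples)
    index = math.ceil(0.75 * len(ordered)) - 1
    return ordered[index]


def rate(metric, value):
    """Classify one metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical field samples for one page.
lcp_samples = [1800, 2100, 2600, 5200]
lcp_p75 = percentile_75(lcp_samples)
print(lcp_p75, rate("lcp_ms", lcp_p75))
```

Because the rating is taken at the 75th percentile, a page needs most of its visits, not just the median one, to be fast before it counts as good.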

Local Search & Hyper-Local Targeting: Local SEO has seen changes with things like the Possum update and the increasing importance of Google Maps results. A trend is Google showing more Map packs and local snippets even for queries that aren’t explicitly local (Google interprets implicit local intent). Businesses should ensure consistent NAP (Name, Address, Phone) across the web, gather Google reviews, and use the Google Business Profile features (posting updates, Q&A, etc.). The future might bring more personalized local results – e.g., factoring in not just your city but your neighborhood, or your personal preferences (if you always go to coffee shops that are open late, Google might rank those higher for you).
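
NAP consistency is easy to audit once listings are collected in one place. The following is a minimal sketch, assuming the listings have already been gathered by hand or from an export; the business details, source names, and helper functions are invented for illustration, and the normalization rules (ignoring punctuation, comparing the last ten phone digits) are simplifying assumptions rather than how Google matches listings.

```python
import re


def normalize_text(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def normalize_phone(phone: str) -> str:
    """Keep digits only; compare the last 10 (assumes NANP-style numbers)."""
    digits = re.sub(r"\D", "", phone)
    return digits[-10:]


def normalize_listing(listing: dict) -> dict:
    return {
        "name": normalize_text(listing["name"]),
        "address": normalize_text(listing["address"]),
        "phone": normalize_phone(listing["phone"]),
    }


def find_nap_mismatches(listings: dict, reference: str) -> list:
    """Return (source, field) pairs that differ from the reference listing."""
    expected = normalize_listing(listings[reference])
    mismatches = []
    for source, listing in listings.items():
        if source == reference:
            continue
        actual = normalize_listing(listing)
        for field in ("name", "address", "phone"):
            if actual[field] != expected[field]:
                mismatches.append((source, field))
    return mismatches


# Invented example data.
LISTINGS = {
    "website": {"name": "Joe's Coffee", "address": "12 Main St., Springfield",
                "phone": "(555) 010-0199"},
    "directory_a": {"name": "Joes Coffee", "address": "12 Main St Springfield",
                    "phone": "+1 555-010-0199"},
    "directory_b": {"name": "Joe's Coffee", "address": "12 Main Street, Springfield",
                    "phone": "555.010.0199"},
}

mismatches = find_nap_mismatches(LISTINGS, reference="website")
print(mismatches)
```

Here the check flags the address on directory_b, where "Street" is spelled out; whether to treat such abbreviation differences as real inconsistencies is a judgment call, and the normalization can be extended to map common abbreviations if not.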

E-A-T and Fighting Misinformation: Given the information ecosystem now (and issues like misinformation), Google is placing more emphasis on authoritative content. We saw this with the Medic update and subsequent core updates: sites with better E-A-T (expanded to E-E-A-T, adding Experience, in Google’s December 2022 quality rater guidelines) tend to outperform in YMYL niches. Going forward, one might expect Google to incorporate more signals of authority (perhaps even author-level authority if they can track that). Google researchers have also published work on “Knowledge-Based Trust”, which scores a source by checking its factual claims against a trusted knowledge base, although Google has not confirmed using it as a ranking signal. SEOs should thus continue to build content that is trustworthy: cite sources, have author bios with credentials, secure your site (HTTPS), and possibly get relevant accreditations (for instance, a medical site linking to profiles of its medically certified staff).

AI-Generated Content & Google’s Stance: With the rise of AI writing tools (GPT-3 and beyond), there’s a trend of auto-generated content. Google’s helpful content update was in part a reaction to this: it aims to ensure the search results aren’t flooded with soulless machine-written text. Google has indicated that AI content is not against guidelines per se if it’s useful and created to help users, but content generated just to game search rankings is (and they have algorithms to target that). This sets the stage for an interesting future: we might see AI tools become part of the content workflow (for suggestions, outlines, etc.), but the human element of adding originality and experience will remain important. Likely, Google will get better at detecting pure AI content and evaluating the value it provides. So, the trend is to use AI as a helper, not a crutch, and always to have human editors review and improve AI-generated material.

Search Evolves (SGE and Beyond): As a futuristic note, Google is experimenting with SGE (Search Generative Experience), which integrates generative AI directly in search results (like an AI summary answer for your query, with citations). If this becomes a regular feature, SEO might partly shift towards ensuring you’re one of the cited sources in AI summaries. Early tests show that well-respected content gets cited. This means those with strong SEO (high authority and relevance) become the sources that the AI pulls from. It’s a bit speculative, but SEO could involve optimizing for AI answers by structuring content in a way that’s easily extractable and by being considered authoritative enough to be chosen by the AI. It’s a trend to watch: for now, it’s limited, but it signals Google’s direction to combine AI with search more deeply.

Emergence of New Search Platforms: While Google remains dominant, keep an eye on other ecosystems – e.g., Bing (with OpenAI’s ChatGPT integration), Amazon (for product search SEO), YouTube (video SEO is basically search optimization within YouTube). Diversifying SEO strategy to consider these is wise, though Google will likely incorporate similar advances.

Future Predictions: In summary, future SEO will likely be characterized by even more integration of AI (for personalized and context-heavy queries), more rich and instant answers (possibly fewer clicks), and the continued need to deliver genuine value. Content will remain central, but the form might change – we might optimize more for answer engines, voice interactions, or AR/VR search interfaces. Technical SEO will still matter as sites adopt new tech (e.g., Web3 or progressive web apps, ensuring those are crawlable). And user intent will always guide the evolution: if user behavior shifts (say, people start preferring interactive results), Google will adapt.

To prepare, SEOs should stay agile: keep learning, test new formats, and above all, focus on the user’s needs. As Google’s Danny Sullivan often says, “We update our algorithms to present more relevant results” – so if you keep your aim on being the most relevant, authoritative result for your topic, you’ll be well-positioned no matter what changes come (How AI powers great search results).

References:

- Google Search Central Documentation and Blog for crawling, indexing, and ranking fundamentals (How Search Engines Work: Crawling, Indexing, Ranking, & More) (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget)

- Search Engine Land and Search Engine Journal reports on algorithm updates (Panda, Penguin, BERT, etc.) (A Complete Guide to the Google Panda Update: 2011-21) (Google Launches “Penguin Update” Targeting Webspam In Search Results) (Welcome BERT: Google’s latest search algorithm to better understand natural language)

- Google’s official blog and statements on AI in search (RankBrain, BERT, MUM) (How AI powers great search results)

- SEO case studies and expert guides illustrating best practices and results (SEO case study: Zero to 100,000 visitors in 12 months) (10 SEO Best Practices to Help You Rank Higher)

- Industry resources on technical SEO topics like crawl budget and structured data (Google Explains Crawl Rate & Crawl Demand Make Up Crawl Budget) (Structured data and SEO: What you need to know in 2025)